Bidirectional Encoder Representations for Transformers (BERT) Simplified

In the past, Natural Language Processing (NLP) models struggled to differentiate words based on context due to the use of shallow embedding methods for text analysis.

Bidirectional Encoder Representations for Transformers (BERT) has revolutionized the NLP research space. It excels at handling language problems considered to be “context-heavy” by attempting to map vectors onto words post reading the entire sentence in contrast to traditional methods in NLP models.

This blog sheds light on the term BERT by explaining its components.

BERT (Bidirectional Encoder Representation from Transformers)

Bidirectional – Reads text from both the directions. As opposed to the directional models, which read the text input sequentially (left-to-right or right-to-left), the Transformer encoder reads the entire sequence of words at once. Therefore, it is considered bidirectional, though it would be more accurate to call it non-directional.

Encoder – Encodes the text in a format that the model can understand. It maps an input sequence of symbol representations to a sequence of continuous representations. It is composed of a stack with 6 identical layers. Each layer has two sub-layers. The first layer is a multi-head self-attention mechanism. And the second layer is a simple, position-wise fully connected feed-forward network. We employ a residual connection around each of the two sub-layers, followed by Layer Normalization. The key feature of layer normalization is that it normalizes the inputs across the features.

Representation – To handle a variety of down-stream tasks, our input representation can unambiguously represent both a single sentence and a pair of sentences, e.g. Question & Answering, in one token sequence in the form of transformer representations.

Transformers – Transformer includes two separate mechanisms — an encoder that reads the text input and a decoder that produces a prediction for the task. Since BERT’s goal is to generate a language model, only the encoder mechanism is necessary.

Transformers are a combination of 3 things:

In this blog, we will only talk about the Attention Mechanism.

Limitations of RNNs over transformers:

- RNNs and its derivatives are sequential, which contrasts with one of the main benefits of a GPU i.e. parallel processing

- LSTM, GRU and derivatives can learn a lot of long-term information, but they can only remember sequences of 100s, not 1000s or 10,000s and above

Attention Concept

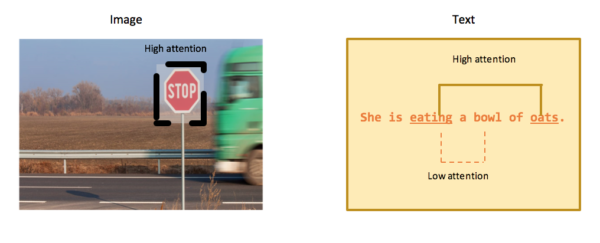

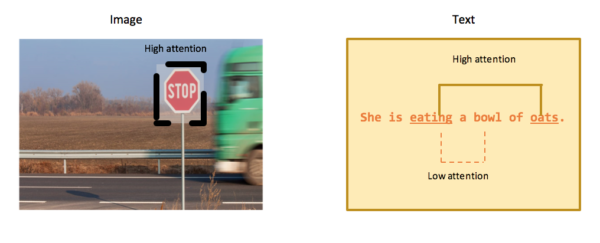

As you can see in the image above, attention must be paid at the stop sign. And for the text, eating (verb) has higher attention in relation to oats.

Transformers use attention mechanisms to gather information about the relevant context of a given word, then encode that context in the vector that represents the word. Thus, attention and transformers together form smarter representations.

Types of Attention:

- Self-Attention

- Scaled Dot-Product Attention

- Multi-Head Attention

Self-Attention

Self-attention, also called intra-attention is an attention mechanism that links different positions of a single sequence to compute a representation of the sequence. Self-attention has been used successfully in a variety of tasks including reading comprehension, abstractive summarization, etc.

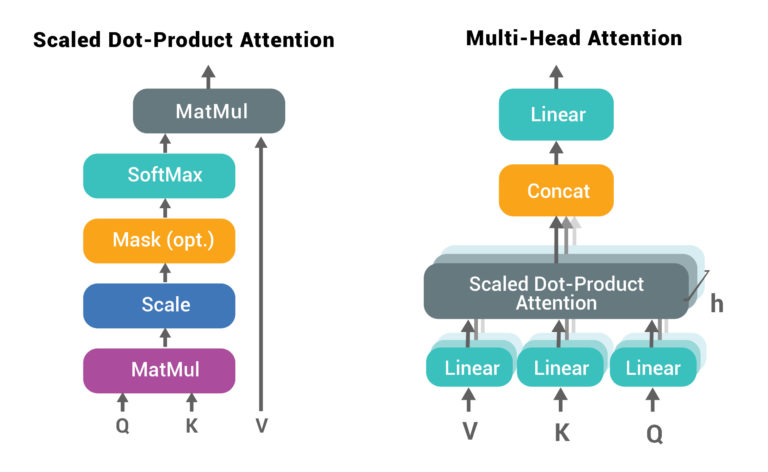

Scaled Dot-Product Attention

Scaled Dot-Product Attention consists of queries Q and keys K of dimension dk, and values V of dimension dv. We compute the dot products of the query with all keys, divide each of them by √dk, and apply a SoftMax function to obtain the weights on the values.

The two most commonly used attention functions are:

- Dot-product (multiplicative) attention: This is identical to the algorithm, except for the scaling factor of √1dk.

- Additive attention: Computes the compatibility function using a feed-forward network with a single hidden layer.

While the two are similar in theoretical complexity, dot-product attention is much faster and more space-efficient in practice as it uses a highly optimized matrix multiplication code.

Multi-Head Attention

Instead of performing a single attention function with dmodel dimensional keys, values and queries, it is beneficial to linearly project the queries and values h times with different, trained linear projections to dk, dk and dv dimensions, respectively. We can then perform the attention function in parallel to each of these projected versions, yielding dv-dimensional output values. These are concatenated and once again projected, resulting in the final values. Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions.

Applications of BERT

- Context-based Question Answering: It is the task of finding an answer to a question over a given context (e.g., a paragraph from Wikipedia), where the answer to each question is a segment of the context.

- Named Entity Recognition (NER): It is the task of tagging entities in text with their corresponding type.

- Natural Language Inference: Natural language inference is the task of determining whether a “hypothesis” is true (entailment), false (contradiction), or undetermined (neutral) given a “premise”.

- Text Classification

Conclusion:

Recent experimental improvements due to transfer learning with language models have demonstrated that rich and unsupervised pre-training is an integral part of most language understanding systems. It is in our interest to

further generalize these findings to deep bidirectional architectures, allowing the same pre-trained model to successfully tackle a broader set of NLP tasks.