Making our Data Scientists and ML Engineers more Efficient (Part 1)

There is a lot of backend grunt work involved in deploying an ML model successfully before we even begin to realize its business benefits. More than 60-70% of the effort that goes into an ML project involves what we call as the “glue work” – from EDAs to getting the QAed features/analytical dataset ready; to moving the final model to production (btw real-time deployments are a nightmare! ?). This glue work is usually the non-jazzy side of ML, but it’s what makes the models do what they are supposed to do –

- Ensure Reproducible and Consistent Training and Serving Pipelines and Datasets

- Ensure Upstream and Downstream QA for stable Feature Values and Predictions

- Ensure 99.99% Availability

- Sometimes, just re-write the entire ML serving part on a completely different server-side environment

Imagine doing the above time, and again every time a model needs to be refreshed or a new model/use case needs to be trained. The times we are living in are fluid, and so are the shelf lives of our models. The traditional approaches to building and deploying models prove to be a huge bottleneck while building models at scale, simply because there is a substantial amount of glue work.

At Affine, we are inculcating a mindset change (read MLOps) amongst our frontline DS and ML engineers to ensure faster training and deployment of ML models (Continuous Training Continuous Delivery/Deployment) across a variety of use cases.

The key to a successful MLOps implementation begins with a mindset change and motivation to adopt new practices, which may initially look like an overhead for a specific project, but guarantees tremendous gains in efficiency and business value over the mid to long run.

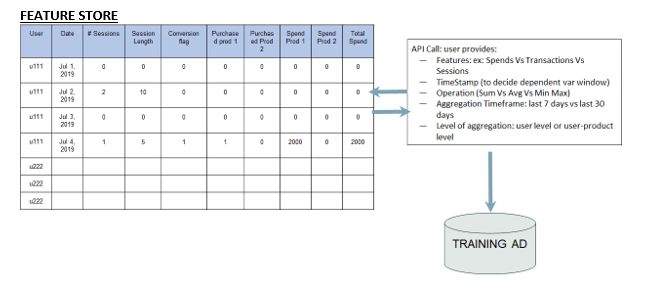

One such practice is the adoption of Enterprise Feature Store. For those familiar with DS/ML terminologies, this essentially is a most comprehensive Analytical Dataset that you can think of – comprises all possible features at the lowest possible granularity (think user-product-day level), and refreshed at a very frequency. Think of it as a living data source that has an ability to serve multiple ML use cases – through a simple API/SDK.

The Feature Store not only facilitates faster creation of Training AD but also Serving/Scoring AD and across multiple use cases within an org function (Ex: Marketing or Supply Chain)

What this means for Data Scientists

- Faster Dev Cycle

- Zero Data Errors: Feature Store being the Single Source of Truth

- Leverage collective intellectual thought leadership from multiple DS who designed those features

What this means for ML Engineers à

- Consistency of Data between Training and Deployment environment

- Faster Deployment in Production

- No loss of information between the DS team and ML engineers

What this means for Business à

- Adaptive Solution means faster and relevant response to market dynamics

- Reusability of components across use cases means standardization and cost-effectiveness

Machine Learning, too many, is the combination of Science and Art. However, we sincerely believe that there is a third component – that of Process. Feature Store is one such ML hygiene practice, and when coupled with a few other elements results in scalable and sustainable ML impact that is very much the need of the world today.

Please stay tuned for more on ML Hygiene ?