Loosely defined, data science is the analysis of a business’s data to produce actionable insights and recommendations for that business. The simplicity or complexity of the analysis, aka the level of “Data Science Sophistication”, also affects the quality and accuracy of the results. This sophistication is essentially a function of three main components: technological skills, math/stats skills, and the business acumen needed to define and deliver a relevant business solution. These three pillars have been the mainstay of data science ever since businesses started embracing it over the past two decades, and they should remain so in the future. What has changed, and will keep changing, is the underlying R&D in technology and statistical techniques. I have seen few other industries where skills become obsolete at such a fast rate. Data science is unique in requiring data scientists and consulting firms to constantly update their skills and to be forward-looking in adopting new and emerging ones. This article looks at how the tools and technologies of data science have evolved over the past few decades and, more importantly, at what the future holds for this fascinating, technology- and innovation-driven field.

THEN > NOW > LATER

When businesses first started embracing data science, the objective was to find more accurate and reliable solutions than those obtained from business heuristics, while keeping the solutions simple enough not to overwhelm business users. Technology choices were kept simple for easier implementation and consumption, and the math/stats were kept simple for easier development and explanation. The early use cases were more exploratory than predictive, which also shaped the choice of tools and techniques. Another important factor was market availability, both of products and, more importantly, of analysts with the relevant skills.

SAS was one of the industry’s workhorses during the 2000s for data processing/EDA jobs and for building backend data for reporting and modeling. A few companies also used SAS for their enterprise data warehouse (EDW), a space otherwise dominated by IBM Netezza, Teradata and Oracle. SPSS was widely used too, owing to its easy-to-use GUI and the solution suite it offered, which included easy-to-develop (but quite handy) solutions like CHAID and PCA.

The so-called “Shallow Learning” techniques were the most common choices for building statistical models, due to the availability of products and resources. For supervised use cases, these mostly included linear regression, Naïve Bayes, logistic regression, CHAID, and time series methods, both univariate (smoothing, ARIMA) and with exogenous variables (ARIMAX). For unsupervised use cases, K-Means clustering and PCA were typical. Toolkits like IBM CPLEX or Excel solvers were mostly used for optimization problems due to their ease of implementation.
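To make the “smoothing” family concrete, here is a minimal sketch of simple exponential smoothing in plain Python (an illustration only, not how SAS or SPSS implement it). Each smoothed level blends the latest observation with the previous level, weighted by a smoothing factor `alpha`, and the last level serves as a flat forecast for the next period. The function name and the `sales` series are hypothetical.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation in `series`.

    level[0] = series[0]
    level[t] = alpha * series[t] + (1 - alpha) * level[t - 1]
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        return []
    levels = [series[0]]
    for value in series[1:]:
        levels.append(alpha * value + (1 - alpha) * levels[-1])
    return levels


# Hypothetical monthly sales figures
sales = [100, 110, 105, 120, 130]
smoothed = simple_exponential_smoothing(sales, alpha=0.5)
forecast = smoothed[-1]  # flat one-step-ahead forecast: 121.25
```

A larger `alpha` reacts faster to recent changes; a smaller one smooths more aggressively.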

Reports were mostly developed and delivered in Excel, with VBA for more complex functionality. Cognos and MicroStrategy were among the other enterprise tools, typically used by large organizations.

Given the nature of this work, the required skill set was quite narrow and limited to what was available off the shelf. Data science firms typically hired people with statistics degrees and trained them on the job in the required programming skills, mainly SQL, SAS and sometimes VBA.

THEN > NOW > LATER

Python and R are the main technologies for today’s data scientists’ daily data processing chores. Both are open source, have vast and ever-evolving libraries, and can integrate with big data platforms as well as visualization products. Both are competent and versatile and can handle a variety of use cases. In general, however, R is preferred when the main objective is to derive business insights through exploratory analysis or modeling, while Python, as a general-purpose programming language, is typically preferred for developing applications with an embedded analytics engine. Both are popular today and are here to stay for years to come.

Another important disruptor has been distributed processing frameworks, pioneered by two Apache open source projects: Hadoop and Spark. Hadoop picked up steam in the early 2010s and is still very popular. When first introduced, Hadoop’s capabilities were limited compared with a relational database system. However, thanks to its low cost, flexibility and ability to scale quickly, and above all the development of many MapReduce-based enablers like Hive, Pig and Mahout, it started to deliver benefits and remains the technology of choice in many organizations that produce terabytes of data daily.
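The MapReduce model that Hive, Pig and Mahout build on is often illustrated with the classic word count. The sketch below runs the map, shuffle and reduce phases in a single Python process purely to show the data flow; it does not use Hadoop’s APIs, and all function names are hypothetical. On a cluster, each phase would run in parallel across nodes, with intermediate results written to disk between phases.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Emit a (word, 1) pair for every word in one input record
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    # Group all values by key, as the framework does between map and reduce
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine all values for one key into a single result
    return key, sum(values)


def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["Spark and Hadoop", "Hadoop and Hive"])
```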

While Hadoop has been a pioneer in distributed data processing, it lacked performance in use cases like iterative data processing, predictive modeling/machine learning (iterative by nature, given the many steps involved) and real-time/stream processing. This is mainly because MapReduce reads data from disk and writes it back at each step, which increases latency. Apache Spark addressed this: it is an in-memory distributed framework that can keep data in memory across an entire operation (made possible by its Resilient Distributed Datasets, or RDDs). This makes it many times faster than Hadoop’s MapReduce for the use cases mentioned above. More importantly, it offers APIs in several languages, such as Scala, Python and Java, so developers can build Spark applications in the language of their choice.
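The latency argument can be illustrated with a toy iterative job. The sketch below estimates a mean by gradient descent, where every iteration needs a full pass over the data. In the MapReduce-style run, the job reloads and re-parses its input on every pass; in the Spark-style run, the parsed data is loaded once and kept in memory. `Storage` is a hypothetical stand-in for a distributed file system that simply counts reads; real Spark code would call `cache()` or `persist()` on an RDD or DataFrame instead.

```python
class Storage:
    """Hypothetical stand-in for a distributed file system; counts reads."""

    def __init__(self, text):
        self.text = text
        self.reads = 0

    def load(self):
        # Every call re-reads and re-parses the raw data, like a fresh disk scan
        self.reads += 1
        return [float(token) for token in self.text.split()]


def fit_mean(get_data, iterations=100, lr=0.1):
    """Estimate the mean by gradient descent on squared error."""
    theta = 0.0
    for _ in range(iterations):
        data = get_data()  # each iteration needs a full pass over the data
        gradient = sum(theta - x for x in data) / len(data)
        theta -= lr * gradient
    return theta


RAW = "1\n2\n3\n4\n5\n"

# MapReduce-style: the input is reloaded on every iteration
mr_storage = Storage(RAW)
theta_mr = fit_mean(mr_storage.load)

# Spark-style: load once, keep the parsed data in memory
spark_storage = Storage(RAW)
cached = spark_storage.load()
theta_spark = fit_mean(lambda: cached)

print(mr_storage.reads, spark_storage.reads)  # 100 1
```

Both runs reach the same estimate; the difference is that the first pays the read-and-parse cost on every iteration, which on a real cluster means disk and network I/O.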

The machine learning space has also seen many advances, with organizations and data scientists using increasingly “deeper” techniques. These can outperform linear and logistic regression because they can uncover complex patterns, non-linearity and variable interactions, and so often deliver higher accuracy. Some of these techniques are listed below:

Supervised – GBM, XGBoost, Random Forests, Parametric GAMs, Support Vector Machines, Multilayer Perceptron

Unsupervised – K-Nearest Neighbours (as a similarity search), Matrix Factorization, Autoencoders, Restricted Boltzmann Machines

NLP & Text Mining – Latent Dirichlet Allocation (to generate keyword topic tags), Maximum Entropy/SVM/NN (for sentiment classification), deep learning frameworks such as TensorFlow etc.

Optimization – Genetic Algorithms, Simulated Annealing, Tabu Search etc.
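Of the optimization techniques listed, simulated annealing is the simplest to sketch. The version below minimizes a one-dimensional function: it proposes a random nearby point, always accepts improvements, and accepts worse points with probability exp(-Δ/T), where the temperature T decays geometrically so the search becomes greedier over time. The step size, starting temperature, cooling rate and iteration count are illustrative assumptions, not tuned values.

```python
import math
import random


def simulated_annealing(cost, x0, step=1.0, t0=10.0, cooling=0.95,
                        iterations=2000, seed=0):
    """Minimize a 1-D `cost` function; returns (best_x, best_cost)."""
    rng = random.Random(seed)  # seeded for reproducible runs
    x, fx = x0, cost(x0)
    best_x, best_f = x, fx
    temperature = t0
    for _ in range(iterations):
        candidate = x + rng.uniform(-step, step)
        fc = cost(candidate)
        # Always accept improvements; accept worse moves with prob exp(-delta / T)
        if fc < fx or rng.random() < math.exp(-(fc - fx) / temperature):
            x, fx = candidate, fc
            if fx < best_f:
                best_x, best_f = x, fx
        # Geometric cooling, floored to avoid dividing by zero
        temperature = max(temperature * cooling, 1e-12)
    return best_x, best_f


# Hypothetical cost with several local minima
best_x, best_f = simulated_annealing(lambda x: x ** 2 + 10 * math.sin(x), x0=5.0)
```

Accepting some uphill moves early on is what lets the search escape local minima that a purely greedy search would get stuck in.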

Some organizations are also adopting ensembling (blending) of various techniques to improve prediction accuracy.
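A minimal sketch of blending in plain Python, assuming regression models whose predictions are already available as lists: `blend` takes a weighted average of the models’ predictions, and `best_two_model_weight` picks the weight for a two-model blend by grid search on a holdout set. The function names, the grid and the toy data are hypothetical; in practice the weights (or a meta-model, as in stacking) are fitted on data the base models did not see during training.

```python
def blend(predictions, weights=None):
    """Weighted average of several models' predictions for the same rows."""
    n_models = len(predictions)
    if weights is None:
        weights = [1.0 / n_models] * n_models
    if len(weights) != n_models:
        raise ValueError("one weight per model is required")
    total = sum(weights)
    return [sum(w * p[i] for w, p in zip(weights, predictions)) / total
            for i in range(len(predictions[0]))]


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def best_two_model_weight(p1, p2, y_holdout, grid=11):
    # Try w in {0, 0.1, ..., 1} for w * p1 + (1 - w) * p2; keep the lowest MSE
    candidates = [i / (grid - 1) for i in range(grid)]
    return min(candidates,
               key=lambda w: mse(y_holdout, blend([p1, p2], [w, 1 - w])))


# Hypothetical holdout targets and two models that err in opposite directions
y_holdout = [1.0, 2.0, 3.0, 4.0]
p_high = [1.5, 2.5, 3.5, 4.5]
p_low = [0.5, 1.5, 2.5, 3.5]
weight = best_two_model_weight(p_high, p_low, y_holdout)
```

Because the two toy models err in opposite directions, the equal-weight blend cancels their errors exactly. Real models are rarely this complementary, but the same mechanism is why blending tends to help.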

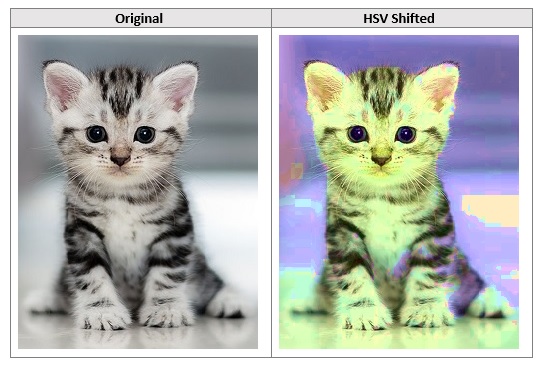

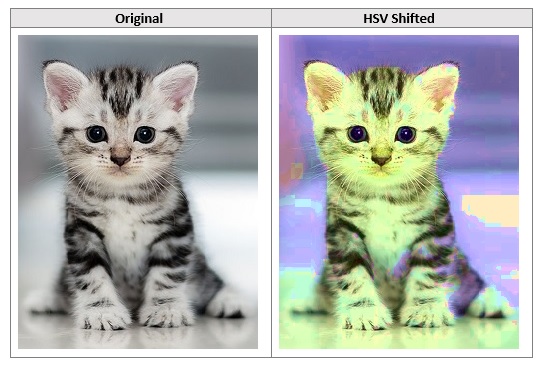

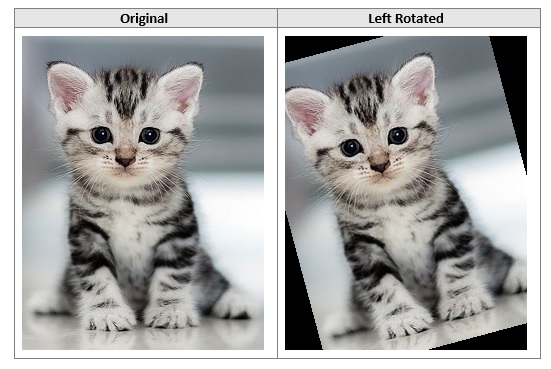

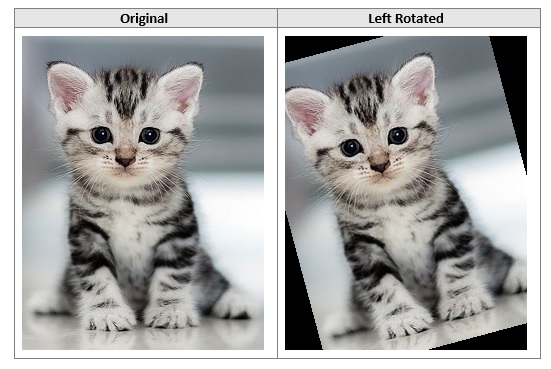

While the techniques described above are “deep” in the sense that they are more complex than their predecessors, they should not be confused with the distinct field of “Deep Learning”, which today finds most of its applications in AI and computer vision. Although deep learning models, especially deep convolutional networks, can be trained on structured data to solve the usual business use cases, they are mostly employed for image classification/recognition and image feature learning. One reason deep learning has not made headway into regular business use cases is that these models are more resource-intensive to develop, implement and maintain. They typically require advanced GPUs for development and may not be worthwhile for regular business use cases unless the gain in accuracy justifies the cost in ROI terms. However, a few non-tech organizations have started using them for non-AI predictive use cases because the added accuracy translated into higher ROI.

On the visualization front, while most organizations favor off-the-shelf products like Tableau, QlikView and Kibana (on Elasticsearch), many are also adopting open source technologies like D3 and Angular as a low-cost option for developing customized, visually appealing and interactive web and mobile dashboards. These libraries offer many reusable components and modules, which make development fast.

With advances on both the technology and algorithm fronts, and the variety of business use cases organizations bring, data science firms started looking for open-minded thinkers with solid programming fundamentals and basic mathematical skills. People with such skills are not only agile at solving any business problem but also flexible in learning new and evolving technologies. It is far easier for such data scientists not only to master R or Python but also to quickly climb the learning curve of any emerging technology.

THEN > NOW > LATER

Given current data science trends, the ongoing R&D and, more importantly, some of the use cases businesses have already started asking about, the future of data science will likely focus heavily on three things: automation, real-time data science development (not just scoring), aka embedded data science, and, of course, “Bigger Data”. This should drive the emergence of new data science paradigms in database handling, programming ecosystems and algorithms. More importantly, it will become critical for data scientists to stay aware of the ongoing R&D and proactively learn emerging tools and techniques rather than play catch-up, which is bad for their careers.

Among the technologies already in use, Scala, Python, PySpark and the Spark ecosystem should remain the main choices for at least the next 2-3 years. Julia hasn’t picked up much steam in mainstream data science work, but it is a fairly useful option thanks to its Python-like syntax and faster single-threaded execution for a good number of use cases. However, Julia’s libraries may need further development and improvement before it becomes one of the default choices.

The main bet, however, would be Google’s Go programming language (Golang). One of its main advantages is that it lets data scientists develop “production-ready” data science code, services and applications. Code written in single-threaded languages is typically very hard to move into production, and transitioning a model from the data scientist’s machine to a production platform (testing, error handling and deployment) takes a huge amount of effort. Go has performed tremendously well in production, allowing data scientists to build scalable, efficient applications from the start rather than heavyweight Python-based ones. Its explicit approach to handling and reporting errors also helps maintain the integrity of the application over time.

On the algorithm front, we hope to see more development and adoption of deep learning for regular business problems. As explained earlier, most deep learning applications today are in image recognition, image feature analysis and AI. While this area will keep developing and producing highly innovative use cases, it would be valuable to apply these algorithms to regular business use cases too. We should also see wider adoption of boosting-based algorithms. Traditionally, these required meticulous tuning to avoid overfitting and a lot of training time due to the large number of iterations. However, with recent advances like XGBoost and LightGBM, we can expect further improved versions of boosting as well as increased adoption.
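The core boosting loop is compact. The sketch below is a bare-bones gradient boosting regressor on a single numeric feature, using one-split decision stumps as weak learners and squared-error loss, for which the negative gradient is just the residual. It omits the regularization, second-order gradient information, histogram-based split finding and other engineering that XGBoost and LightGBM add; the names and toy data are hypothetical.

```python
def fit_stump(x, residuals):
    """Fit a one-split regression stump to `residuals` by least squares."""
    mean_all = sum(residuals) / len(residuals)
    # Start from the no-split model (predict the overall mean everywhere)
    best = (sum((r - mean_all) ** 2 for r in residuals), None, mean_all, mean_all)
    for threshold in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_value, right_value = best
    if threshold is None:
        return lambda xi: left_value
    return lambda xi: left_value if xi <= threshold else right_value


def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared-error regression on one feature."""
    base = sum(y) / len(y)  # initial model: the mean of y
    stumps = []

    def predict(xi):
        return base + learning_rate * sum(stump(xi) for stump in stumps)

    for _ in range(n_rounds):
        # For squared-error loss, the negative gradient is the residual
        residuals = [yi - predict(xi) for xi, yi in zip(x, y)]
        stumps.append(fit_stump(x, residuals))
    return predict


# Hypothetical toy data with a step in the target
x = [1, 2, 3, 4, 5, 6]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(x, y, n_rounds=50)
```

The `learning_rate` shrinks each stump’s contribution, one of the standard guards against overfitting, at the price of needing more rounds: the many iterations the paragraph above refers to.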

“Big Data Visualization” should also see more adoption. Interactive visualizations built on top of streaming data require a versatile skill set. Building Node.js and AngularJS applications on top of Spark (Spark Streaming with Kafka) and tapping into the right database (MongoDB or Cassandra) should remain a viable option. Apache Zeppelin with Spark should also see more adoption as it matures.
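Streaming dashboards usually display pre-aggregated numbers rather than raw events. The sketch below, in plain Python rather than the Spark Streaming or Kafka APIs, computes per-key counts over fixed (tumbling) time windows, the kind of aggregate a streaming job might write to a database for a dashboard front end to read. It assumes events arrive in timestamp order; the event format and window size are hypothetical, and real streaming engines also handle late or out-of-order data.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs in timestamp
    order. Yields (window_start, {key: count}) each time a window closes.
    """
    current_start = None
    counts = defaultdict(int)
    for ts, key in events:
        start = ts - ts % window_seconds  # align to the window boundary
        if current_start is not None and start != current_start:
            # An event from a later window arrived: emit the finished window
            yield current_start, dict(counts)
            counts = defaultdict(int)
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last open window


# Hypothetical click-stream events: (seconds since start, event type)
events = [(0, "click"), (3, "view"), (9, "click"),
          (10, "click"), (14, "view"), (21, "click")]
windows = list(tumbling_window_counts(events, window_seconds=10))
```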

The data science industry is evolving at an exponential pace, whether in the types of use cases it addresses or in the tools and technologies available. The key to a successful data science practice is to hire individuals who stay aware of the R&D in the tool/tech space and are not afraid to embrace new technology. Like a good forecast model, a good data scientist should evaluate the available inputs (i.e. tech options already in use or under development) and predict what will be the best option(s) for tomorrow.